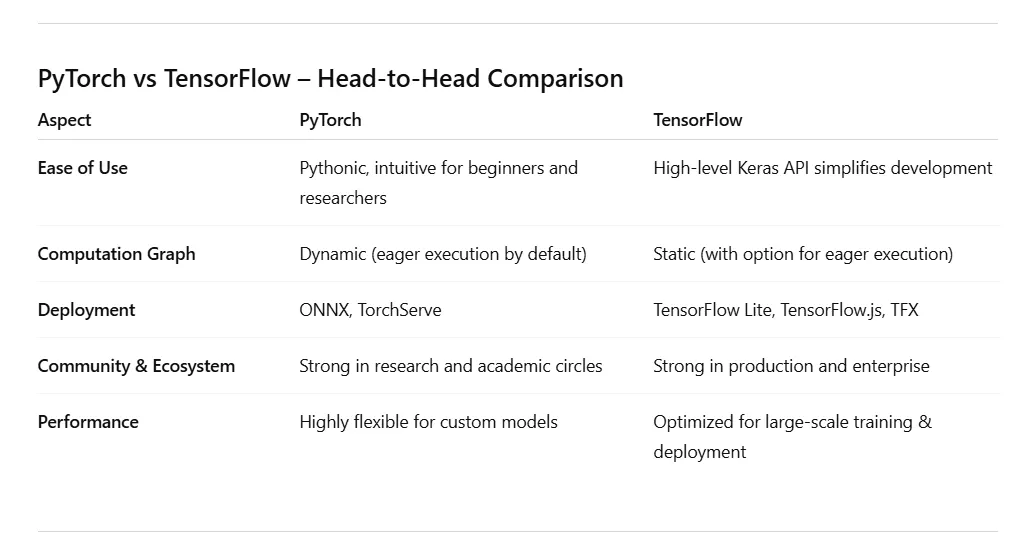

The AI and machine learning ecosystem has grown rapidly, and PyTorch and TensorFlow have emerged as two of its most widely adopted frameworks. Choosing between them depends on project requirements, team expertise, ecosystem compatibility, and deployment targets. This post takes a deep dive into both frameworks: their strengths, their weaknesses, and the scenarios where each is the better fit.

Throughout this discussion, we will also explore insights from AI Orbit Labs, a leader in building advanced AI systems.

Why Choosing the Right Framework Matters

The choice of framework affects:

- Development Speed — Rapid prototyping and experimentation require flexible tools.

- Performance — Model training and inference efficiency can vary significantly.

- Deployment Flexibility — Some frameworks provide more robust support for mobile, web, or edge devices.

- Ecosystem & Community — Libraries, tutorials, and community support drive faster learning and troubleshooting.

PyTorch: A Research-Friendly Framework for Rapid Experimentation

1. Overview of PyTorch

PyTorch, originally developed by Facebook’s AI Research (FAIR) team and now governed by the PyTorch Foundation, is known for its dynamic computation graph and intuitive, Pythonic interface. It became a preferred choice among researchers because models are ordinary Python code that can be inspected and debugged with standard tools.
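To make the dynamic-graph idea concrete, here is a minimal sketch. The module `RepeatedLayer` and its `n_steps` argument are hypothetical names chosen for illustration: the forward pass uses a plain Python loop and an `if` on tensor values, and autograd records only the operations that actually run on each call.

```python
import torch
import torch.nn as nn


class RepeatedLayer(nn.Module):
    """Hypothetical module whose depth is chosen at call time."""

    def __init__(self, dim: int = 8):
        super().__init__()
        self.linear = nn.Linear(dim, dim)

    def forward(self, x: torch.Tensor, n_steps: int) -> torch.Tensor:
        # Ordinary Python loop: autograd records the operations as they run,
        # so each call can produce a differently shaped graph.
        for _ in range(n_steps):
            x = torch.relu(self.linear(x))
        # Data-dependent branch, evaluated eagerly on the actual values
        if x.sum() > 0:
            x = x * 2
        return x


torch.manual_seed(0)
layer = RepeatedLayer()
inputs = torch.randn(4, 8)

for steps in (1, 3):
    out = layer(inputs, n_steps=steps)
    out.sum().backward()  # gradients follow whichever path actually executed
    print(steps, layer.linear.weight.grad.abs().sum().item())
    layer.zero_grad()     # reset gradients before the next experiment
```

No graph is declared ahead of time; changing `n_steps` between calls is all it takes to change the model's depth.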

Key advantages of PyTorch:

- Dynamic Computation Graphs — The graph is built on the fly as operations execute, so a model’s structure can change from one forward pass to the next, which suits research and experimentation.

- Pythonic Design — Feels like writing standard Python code, making it beginner-friendly.

- Growing Production Support — ONNX export and serving tools such as TorchServe have made PyTorch considerably more practical for deployment than it once was.

- Strong Community — A large ecosystem of pretrained models and domain libraries such as torchvision and torchaudio (torchtext, once part of this set, is no longer actively developed).

2. When to Choose PyTorch?

- Research & Prototyping — Ideal for AI research labs, universities, and experimental model development.

- Custom Model Architectures — Perfect when working with non-standard neural network designs.

- Natural Language Processing (NLP) — Hugging Face’s Transformers library treats PyTorch as its primary backend.

- Computer Vision & Generative AI — Many recent generative models, including StyleGAN3 and widely used diffusion-model codebases, are released as PyTorch implementations.
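As an illustration of a non-standard design, the sketch below defines a residual block whose contribution is scaled by a learnable gate. `GatedResidualBlock` is a hypothetical example, not a PyTorch built-in; the point is that any tensor wrapped in `nn.Parameter` is registered, optimized, and saved like a built-in layer’s weights.

```python
import torch
import torch.nn as nn


class GatedResidualBlock(nn.Module):
    """Hypothetical block: output = x + sigmoid(gate) * MLP(x)."""

    def __init__(self, dim: int, hidden: int = 32):
        super().__init__()
        self.mlp = nn.Sequential(
            nn.Linear(dim, hidden),
            nn.GELU(),
            nn.Linear(hidden, dim),
        )
        # A raw tensor wrapped in nn.Parameter is registered with the module
        # and trained like any built-in layer weight.
        self.gate = nn.Parameter(torch.zeros(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x + torch.sigmoid(self.gate) * self.mlp(x)


block = GatedResidualBlock(dim=16)
x = torch.randn(8, 16)
print(block(x).shape)  # torch.Size([8, 16])
print(sum(p.numel() for p in block.parameters()))  # 1073
```

Because the block is an ordinary `nn.Module`, it composes with `nn.Sequential`, optimizers, and `state_dict()` saving without extra glue code.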

For more insights on experimental AI development, explore AI Orbit Labs’ AI Agents Project, which demonstrates cutting-edge approaches to AI automation.

3. PyTorch Code Example — Image Classification

Below is a minimal PyTorch implementation of an MNIST digit classifier using a small fully connected network:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms

# Data transformation: convert images to tensors in [0, 1],
# then normalize them to roughly [-1, 1]
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,)),
])

# Load the MNIST training set (downloaded to ./data on first run)
train_data = datasets.MNIST(root='./data', train=True, download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_data, batch_size=64, shuffle=True)


# Define a small fully connected network
class SimpleNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(x.shape[0], -1)  # flatten 1x28x28 images into 784-dim vectors
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return F.log_softmax(x, dim=1)  # log-probabilities, paired with NLLLoss


# Initialize model, loss function, optimizer
model = SimpleNN()
criterion = nn.NLLLoss()
optimizer = optim.Adam(model.parameters(), lr=0.003)

# Training loop
model.train()
for epoch in range(5):
    running_loss = 0.0
    for images, labels in train_loader:
        optimizer.zero_grad()             # clear gradients from the previous step
        log_ps = model(images)            # forward pass
        loss = criterion(log_ps, labels)
        loss.backward()                   # backpropagate
        optimizer.step()                  # update the weights
        running_loss += loss.item()
    print(f"Epoch {epoch + 1}: average loss {running_loss / len(train_loader):.4f}")

print("Training Complete")
```

This short script shows why PyTorch is popular for quick prototyping, particularly in computer vision: the model is a plain Python class, and each training step is a handful of explicit, easy-to-inspect lines.
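The training script trains but never measures accuracy. A minimal evaluation helper might look like the following; `evaluate` is a hypothetical name, and the random tensors and `toy_model` stand in for the MNIST test split and the trained network so the sketch runs on its own.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model: nn.Module, loader: DataLoader) -> float:
    """Return the classification accuracy of `model` over `loader`."""
    was_training = model.training
    model.eval()                    # switch layers such as dropout to inference behavior
    correct, total = 0, 0
    with torch.no_grad():           # skip building the autograd graph
        for inputs, labels in loader:
            preds = model(inputs).argmax(dim=1)
            correct += (preds == labels).sum().item()
            total += labels.size(0)
    model.train(was_training)       # restore the caller's mode
    return correct / total


# Stand-in data so the sketch runs without downloading MNIST
torch.manual_seed(0)
features = torch.randn(100, 1, 28, 28)
targets = torch.randint(0, 10, (100,))
loader = DataLoader(TensorDataset(features, targets), batch_size=32)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
print(f"accuracy: {evaluate(toy_model, loader):.2f}")
```

With the real script, one would build the loader from `datasets.MNIST(root='./data', train=False, download=True, transform=transform)` and pass the trained `model`; taking `argmax` works the same on log-probabilities as on raw logits.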

TensorFlow: The Production Powerhouse

1. Overview of TensorFlow

TensorFlow, developed by Google Brain, is a comprehensive ecosystem for machine learning and deep learning. Beyond model building, it supports deployment to servers, browsers, mobile, and edge devices, which makes it well suited to large-scale production environments.

Key strengths of TensorFlow:

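- Graph Compilation — TensorFlow 2.x executes eagerly by default, and tf.function traces Python functions into graphs that can be optimized and exported. The static graphs of TensorFlow 1.x offered similar performance benefits at the cost of flexibility.

- TensorFlow Lite & TensorFlow.js — Convert models for mobile and embedded devices (TensorFlow Lite, now rebranded as LiteRT) or run them directly in the browser (TensorFlow.js).

- Keras API Integration — A high-level API for rapid model building with minimal code.

- Distributed Training — The tf.distribute API (for example, MirroredStrategy and TPUStrategy) scales training across multiple GPUs and TPUs.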
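TensorFlow 2.x executes operations eagerly by default; graphs are produced by wrapping a Python function in `tf.function`, which traces it and uses AutoGraph to turn tensor-dependent `if` statements into graph ops. A minimal sketch, with `positive_sum` as a hypothetical function name:

```python
import tensorflow as tf


@tf.function
def positive_sum(x):
    total = tf.reduce_sum(x)
    # This `if` depends on a tensor value, so AutoGraph converts it into a
    # conditional graph op instead of evaluating it once in Python.
    if total > 0:
        result = total
    else:
        result = tf.zeros_like(total)
    return result


print(positive_sum(tf.constant([1.0, 2.0, 3.0])).numpy())  # 6.0
print(positive_sum(tf.constant([-1.0, -2.0])).numpy())     # 0.0

# Trace once for float32 vectors of any length and inspect the resulting graph
concrete = positive_sum.get_concrete_function(tf.TensorSpec(shape=[None], dtype=tf.float32))
print(len(concrete.graph.get_operations()), "ops in the traced graph")
```

Calls with a new input shape or dtype trigger a retrace, and passing `jit_compile=True` to `tf.function` additionally requests XLA compilation.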

2. When to Choose TensorFlow?

- Production Deployment — Ideal for enterprise AI applications that require scalability.

- Mobile & Edge AI — TensorFlow Lite enables lightweight models for resource-constrained environments.

- Cross-Platform ML — Integration with TensorFlow.js allows models to run in browsers.

- Pre-Trained Models & APIs — Access to TensorFlow Hub (whose models are now hosted on Kaggle Models) and Google Cloud AI for faster development.
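For the mobile and edge case, the usual path is to convert a model to a TensorFlow Lite flatbuffer and run it with the TFLite interpreter. The sketch below assumes a tiny hypothetical `TinyModel` (a single matrix multiply) in place of a real trained network so the output is easy to check; recent TensorFlow releases may warn that the interpreter is moving to the LiteRT package.

```python
import numpy as np
import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical stand-in for a trained model: y = x @ W + b."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones([4, 2]))
        self.b = tf.Variable(tf.zeros([2]))

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w) + self.b


module = TinyModel()

# Convert the traced function into a TensorFlow Lite flatbuffer (returned as bytes)
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [module.__call__.get_concrete_function()], module
)
tflite_model = converter.convert()

# Run the converted model with the TFLite interpreter
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]

sample = np.array([[1.0, 2.0, 3.0, 4.0]], dtype=np.float32)
interpreter.set_tensor(input_index, sample)
interpreter.invoke()
print(interpreter.get_tensor(output_index))  # [[10. 10.]]
```

Setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` before calling `convert()` enables default post-training quantization, which typically shrinks the model for on-device use.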

For enterprise-scale AI insights, explore AI Orbit Labs’ AI-Powered HR Recruitment System showcasing TensorFlow-powered solutions.

3. TensorFlow Code Example — Image Classification

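```python
import tensorflow as tf
from tensorflow.keras import layers, models
from tensorflow.keras.datasets import mnist

# Load dataset and scale pixel values from [0, 255] to [0, 1]
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

# Build model (an explicit Input layer declares the 28x28 image shape)
model = models.Sequential([
    layers.Input(shape=(28, 28)),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax'),
])

# Compile model: integer labels pair with sparse categorical cross-entropy
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train model (the test split doubles as validation data here, for brevity)
model.fit(x_train, y_train, epochs=5, validation_data=(x_test, y_test))

# Report final test accuracy
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=0)
print(f"Training Complete, test accuracy: {test_acc:.4f}")
```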

TensorFlow’s Keras API reduces model definition, training, and evaluation to a few high-level calls, and trained models can be saved and exported for serving, as seen in projects like AI Orbit Labs’ Multilingual Voice Agent.
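One concrete step toward production is persisting the trained model. A minimal sketch, assuming a recent TensorFlow/Keras version that supports the native `.keras` format; the small untrained model and the file name `mnist_classifier.keras` are hypothetical stand-ins for the classifier trained above.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, models

# Small stand-in for the trained MNIST model (untrained here)
model = models.Sequential([
    layers.Input(shape=(28, 28)),
    layers.Flatten(),
    layers.Dense(10, activation="softmax"),
])

# Save architecture and weights to a single file in the native Keras format
path = os.path.join(tempfile.mkdtemp(), "mnist_classifier.keras")
model.save(path)

# Reload and check that the restored model makes the same predictions
restored = tf.keras.models.load_model(path)
batch = np.random.rand(2, 28, 28).astype("float32")
print(np.allclose(model.predict(batch, verbose=0), restored.predict(batch, verbose=0)))
```

In Keras 3, `model.export()` can additionally write a TensorFlow SavedModel for serving systems such as TensorFlow Serving.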

Conclusion: Which Should You Choose?

- Choose PyTorch if you are focusing on research, prototyping, or experimental model development where flexibility is key.

- Choose TensorFlow if your priority is production deployment, scalability, and support for mobile/web platforms.

In practice, some teams use both: they prototype in PyTorch and then deploy through an interchange format such as ONNX, or rebuild the final model in TensorFlow when its deployment tooling is required.

For advanced AI solutions, visit AI Orbit Labs and explore our blog for more technical insights.